Using Java in IntelliJ IDEA to Remotely Connect to and Operate on Hadoop HDFS

Source: unknown  Date: 2018-18-23  Views: 492

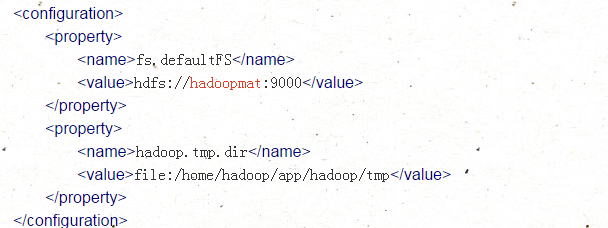

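To connect to and operate on Hadoop's distributed file system (HDFS) remotely from Java in IntelliJ IDEA, install the CDH distribution of Hadoop. With the Apache distribution, a remote connection requires compiling the bin files, which is cumbersome. After installing CDH Hadoop, configure it to allow remote access as follows.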

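To connect to HDFS from Java, the Hadoop server must be configured with the entry `0.0.0.0 hadoopmat`, and the hosts file on the local Windows machine must map the same hostname to the server's address: `111.111.11.11 hadoopmat`.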
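
Before moving on to the Hadoop client, it can be worth confirming from the development machine that the hostname resolves through the hosts file and that the NameNode port accepts TCP connections. The following is a minimal sketch using only the JDK. The class name `HdfsReachability`, its method names, and the 3-second timeout are illustrative assumptions, not part of Hadoop; port 9000 is the one that appears in the HDFS URI used in this article and may differ on your cluster.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.UnknownHostException;

// Hypothetical helper (not part of Hadoop): checks name resolution and TCP reachability.
class HdfsReachability {

    /** Returns the IP address the host resolves to, or null if it cannot be resolved. */
    static String resolve(String host) {
        try {
            return InetAddress.getByName(host).getHostAddress();
        } catch (UnknownHostException e) {
            return null;
        }
    }

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers unresolved hosts, refused connections, and timeouts.
            return false;
        }
    }

    public static void main(String[] args) {
        String host = "hadoopmat"; // hostname from the hosts file entry
        int port = 9000;           // assumed NameNode port; adjust to your cluster
        System.out.println(host + " resolves to: " + resolve(host));
        System.out.println("port " + port + " reachable: " + canConnect(host, port, 3000));
    }
}
```

If `resolve` returns `null`, the hosts file entry is probably missing or misspelled; if the name resolves but `canConnect` returns `false`, the server-side binding or a firewall is the more likely cause.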

I. Add the Maven dependency


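    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>2.6.0-cdh5.13.0</version>
    </dependency>

CDH builds such as this one are published in Cloudera's Maven repository rather than Maven Central, so the project's pom.xml also needs that repository declared.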

II. Initialize the HDFS connection and obtain a FileSystem object
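
The connection in this section is addressed by an HDFS URI of the form `hdfs://<host>:<port>`. As a small sketch of how such a string breaks down, the JDK-only helper below parses it and rejects values that lack the `hdfs` scheme, a host, or an explicit port. The class `HdfsUriCheck` and its `describe` method are hypothetical names introduced for illustration; Hadoop itself does not require this check.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper (not part of Hadoop): sanity-checks an HDFS URI string.
class HdfsUriCheck {

    /**
     * Parses the given string and returns a short description of its parts.
     * Throws IllegalArgumentException if it is not an hdfs:// URI with host and port.
     */
    static String describe(String hdfsPath) {
        URI uri;
        try {
            uri = new URI(hdfsPath);
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Malformed URI: " + hdfsPath, e);
        }
        if (!"hdfs".equals(uri.getScheme())) {
            throw new IllegalArgumentException("Expected scheme hdfs, got: " + uri.getScheme());
        }
        if (uri.getHost() == null || uri.getPort() == -1) {
            throw new IllegalArgumentException("Host and port are required: " + hdfsPath);
        }
        return "scheme=" + uri.getScheme() + " host=" + uri.getHost() + " port=" + uri.getPort();
    }

    public static void main(String[] args) {
        // The value used for HDFS_PATH in this article.
        System.out.println(describe("hdfs://hadoopmat:9000"));
    }
}
```

The host part must match the name configured in the hosts file, and the port must match the NameNode port configured on the server.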

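The fields and setup method below belong in a JUnit 4 test class. They use `org.apache.hadoop.conf.Configuration`, `org.apache.hadoop.fs.FileSystem`, `java.net.URI`, and JUnit's `org.junit.Before`.

    public static final String HDFS_PATH = "hdfs://hadoopmat:9000";

    private Configuration configuration;
    private FileSystem fileSystem;

    // Runs before each test: connects to HDFS as user "root".
    @Before
    public void function_before() throws URISyntaxException, IOException, InterruptedException {
        configuration = new Configuration();
        fileSystem = FileSystem.get(new URI(HDFS_PATH), configuration, "root");
    }

III. File operations on HDFS from Java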

    // Also requires: org.apache.hadoop.fs.Path, FileStatus, FSDataInputStream,
    // org.apache.hadoop.io.IOUtils, org.junit.Test, and the java.io stream classes.

    /**
     * Create a directory on HDFS.
     */
    @Test
    public void testMkdirs() throws Exception {
        fileSystem.mkdirs(new Path("/springhdfs/test"));
    }

    /**
     * List the contents of the root directory.
     */
    @Test
    public void testLSR() throws IOException {
        Path path = new Path("/");
        FileStatus fileStatus = fileSystem.getFileStatus(path);
        System.out.println("*************************************");
        System.out.println("Root directory: " + fileStatus.getPath());
        System.out.println("Directory contents:");
        for (FileStatus fs : fileSystem.listStatus(path)) {
            System.out.println(fs.getPath());
        }
    }

    /**
     * Upload a local file to HDFS.
     */
    @Test
    public void upload() throws Exception {
        Path srcPath = new Path("F:/hadooptst/hadoop.txt");
        Path dstPath = new Path("/springhdfs/test");
        // First argument false: keep the local source file after copying.
        fileSystem.copyFromLocalFile(false, srcPath, dstPath);
        fileSystem.close();
        System.out.println("*************************************");
        System.out.println("Upload succeeded!");
    }

    /**
     * Download a file from HDFS to the local disk.
     */
    @Test
    public void download() throws Exception {
        InputStream in = fileSystem.open(new Path("/springhdfs/test/hadoop.txt"));
        OutputStream out = new FileOutputStream("E:/hadoop.txt");
        // Last argument true: copyBytes closes both streams when it finishes.
        IOUtils.copyBytes(in, out, 4096, true);
    }

    /**
     * Delete a file from HDFS.
     */
    @Test
    public void delete() throws Exception {
        Path path = new Path("/springhdfs/test/hadoop.txt");
        // Second argument true: delete recursively if the path is a directory.
        fileSystem.delete(path, true);
        System.out.println("*************************************");
        System.out.println("Delete succeeded!");
    }

    /**
     * Print the contents of a file on HDFS.
     */
    @Test
    public void look() throws Exception {
        Path path = new Path("/springhdfs/test/hadoop.txt");
        System.out.println("*************************************");
        System.out.println("File contents:");
        // Copy raw bytes to stdout rather than casting each byte to char,
        // which would garble multi-byte characters.
        try (FSDataInputStream in = fileSystem.open(path)) {
            IOUtils.copyBytes(in, System.out, 4096, false);
        }
    }
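
Reading a text file one byte at a time and casting each byte to `char` only works for single-byte characters; multi-byte UTF-8 text (Chinese, for example) comes out garbled. As a sketch of one alternative, the helper below decodes any `InputStream` as UTF-8 into a `String`. Because `FSDataInputStream` is an `InputStream`, the stream returned by `fileSystem.open(path)` could be passed to it directly. The class and method names are hypothetical, and UTF-8 is an assumption about how the file was encoded.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: decodes a stream as UTF-8 text instead of casting bytes to chars.
class Utf8StreamReader {

    /** Reads the whole stream, decodes it as UTF-8, and closes the stream. */
    static String readAll(InputStream in) {
        StringBuilder text = new StringBuilder();
        try (BufferedReader reader =
                 new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
            char[] buffer = new char[4096];
            int n;
            while ((n = reader.read(buffer)) != -1) {
                text.append(buffer, 0, n);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return text.toString();
    }

    public static void main(String[] args) {
        // Stand-in for fileSystem.open(path): an in-memory stream holding
        // "hello " followed by two Chinese characters (written as Unicode escapes).
        byte[] data = "hello \u4f60\u597d".getBytes(StandardCharsets.UTF_8);
        System.out.println(readAll(new ByteArrayInputStream(data)));
    }
}
```

This reads the whole file into memory, so it suits small text files; for large files, streaming the bytes to their destination is the safer choice.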
